The Dark Side of Reddit: Unmasking the Terrorist Propaganda Pipeline

Ashley Rindsberg Uncovers Coordinated Anti-Capitalist, Radical Marxist, and Islamist Propaganda in Major Subreddits

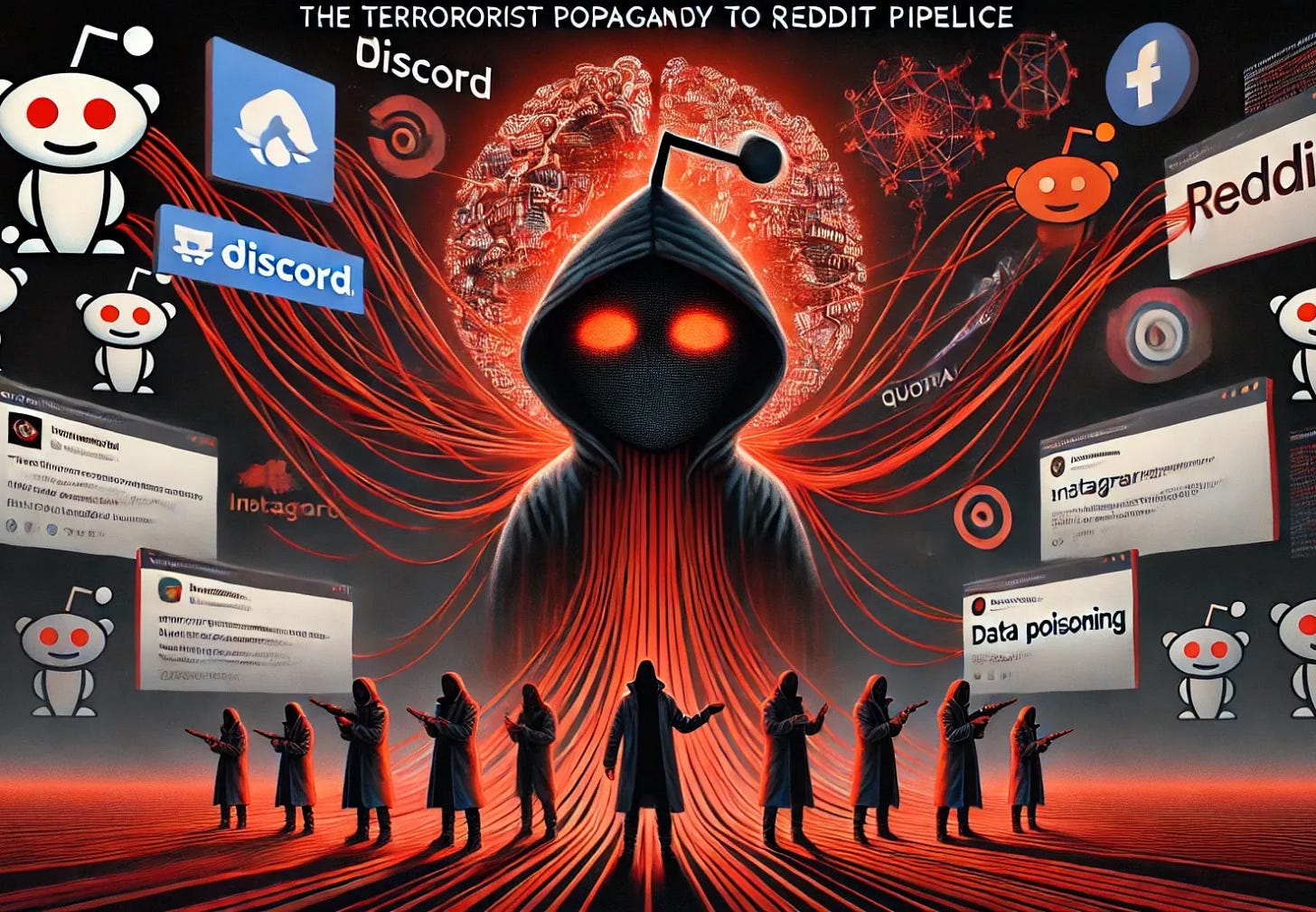

In the sprawling universe of social media, Reddit stands as one of the internet’s most influential platforms, where communities form around shared interests, niche topics, and global issues. But as Ashley Rindsberg’s recent investigative piece, “The Terrorist Propaganda to Reddit Pipeline,” reveals, extremist networks have exploited this openness, turning Reddit into a covert channel for laundering propaganda from US-designated terrorist organizations.

The Infiltration of Reddit by Extremist Networks

Rindsberg’s investigation exposes a coordinated network, centered on the 270,000-member subreddit r/Palestine, that systematically launders propaganda from groups like Hamas, Hezbollah, and Palestinian Islamic Jihad. The network operates not only on Reddit but also across Discord, X, Instagram, Quora, and Wikipedia, and it manipulates search engines and AI models through a tactic known as “data poisoning.” In data poisoning, malicious actors flood platforms with misleading or biased content to influence AI training data and search engine rankings, spreading propaganda more widely and making it appear credible.

What makes this network particularly dangerous is its strategic infiltration of massive, non-political subreddits such as r/Documentaries (20 million members), r/PublicFreakout (4.7 million), and r/therewasanattempt (7.2 million). By controlling these communities, the network misleads millions of users into consuming content that appears organic but is, in reality, part of a carefully orchestrated propaganda campaign.

Data Poisoning: Manipulating AI and Search Engines

One of the most alarming revelations is how this network leverages data poisoning to manipulate AI models and search engine algorithms. Through Reddit’s $60 million content licensing deal with Google and its data-sharing partnership with OpenAI, Reddit posts, especially highly upvoted ones, are used in training datasets for large language models, including those behind ChatGPT.

This creates a feedback loop where manipulated content is not only spread across social media but also influences AI outputs and search engine results. For instance, searches on Google related to Israeli hostages were topped by manipulated posts from r/Palestine, overshadowing credible sources.

The Resistance News Network (RNN) and Content Laundering

At the heart of this propaganda pipeline lies the Resistance News Network (RNN), a Telegram-based aggregator that translates and republishes content from terror-affiliated channels. RNN pulls from a network of banned terrorist organizations, rebranding their messaging and funneling it into Western platforms like Reddit.

RNN’s role is not merely passive aggregation. It actively works with groups like Samidoun, a US-designated terrorist entity, to spread content across the web. This allows terrorist organizations to evade bans on platforms like Telegram and legal restrictions on their content in Western countries.

Reddit’s Lax Moderation and Legal Blind Spots

Despite being repeatedly warned about these activities, Reddit’s trust and safety team has failed to act decisively. According to Rindsberg’s sources, internal reports and user alerts were ignored or dismissed, with some Reddit executives even mocking concerns about vote brigading and content manipulation.

This hands-off approach poses serious legal and ethical risks. Under US law, distributing propaganda or recruitment materials in coordination with a designated terrorist group can constitute “material support,” which is not protected by the First Amendment. Platforms like Reddit could face criminal liability if they knowingly allow such content to proliferate.

The Astroturfing Tactics: Creating Illusions of Public Opinion

The network’s strategy goes beyond simple content posting. Through astroturfing—a method of creating the false appearance of grassroots support—it uses subreddit recommendations, pinned posts, and vote brigading to manufacture the illusion of widespread anti-Israel and anti-Western sentiment.

Subreddits like r/Documentaries funnel users into increasingly radical spaces, creating an ideological pipeline where users, often unaware, are exposed to extremist narratives. This slow radicalization process is amplified by the platform’s algorithmic promotion of highly upvoted content, giving manipulated posts a veneer of credibility.

Antisemitism on Reddit: Addressing Moderator Concerns

The ADL’s report “Online Hate and Harassment: The American Experience 2024” sheds light on the broader issue of antisemitism on Reddit. Moderators of Jewish-focused subreddits have faced overwhelming antisemitic abuse, especially since Hamas’s October 7, 2023 attacks. Despite the ADL’s engagement with Reddit, many moderators report insufficient support and continued harassment.

Reddit has taken some steps in response to these concerns, such as providing refresher training on its hate speech policies (including those covering antisemitism), onboarding Jewish community representatives into its Partner Communities Program, and instituting new workflows to ensure that identity-based concerns are reliably escalated.

However, moderators still face significant challenges, including targeted harassment, intentional misuse of reporting tools, and antagonistic subreddits that retaliate against Jewish users and their allies.

AI, National Security, and Content Moderation

Weaponized data poisoning threatens national security, public discourse, and democratic processes. As AI models like ChatGPT increasingly shape how people consume information, the risks of data poisoning grow with them. Manipulated datasets can embed biased narratives deep in AI systems, producing outputs that echo extremist propaganda without users realizing it. The revelations in “The Terrorist Propaganda to Reddit Pipeline” demand a reevaluation of how platforms like Reddit monitor and moderate content.