Israeli Startup Q.ai Acquired by Apple in Estimated $1.6 Billion Deal

Q.ai takes human communication to the next level, developing technology that reads facial micro-movements to process whispered and silent speech

In a landmark acquisition announced last Thursday, Apple confirmed it has purchased Israeli startup Q.ai for approximately $1.6 billion, marking the tech giant’s second-largest acquisition in history. The three-year-old company, which employs about 100 people, has developed technology that can interpret human speech without any audible sound, potentially allowing users to control devices without speaking aloud.

The acquisition reunites Apple with Q.ai CEO Aviad Maizels, whose previous company PrimeSense was acquired by Apple in 2013 and later became the foundation for Face ID technology. Expressing mutual excitement, Maizels spoke of “extraordinary possibilities for pushing boundaries,” while Apple’s hardware chief Johny Srouji praised Q.ai’s innovative approach to imaging and machine learning.

A New Era of Human-Computer Interaction

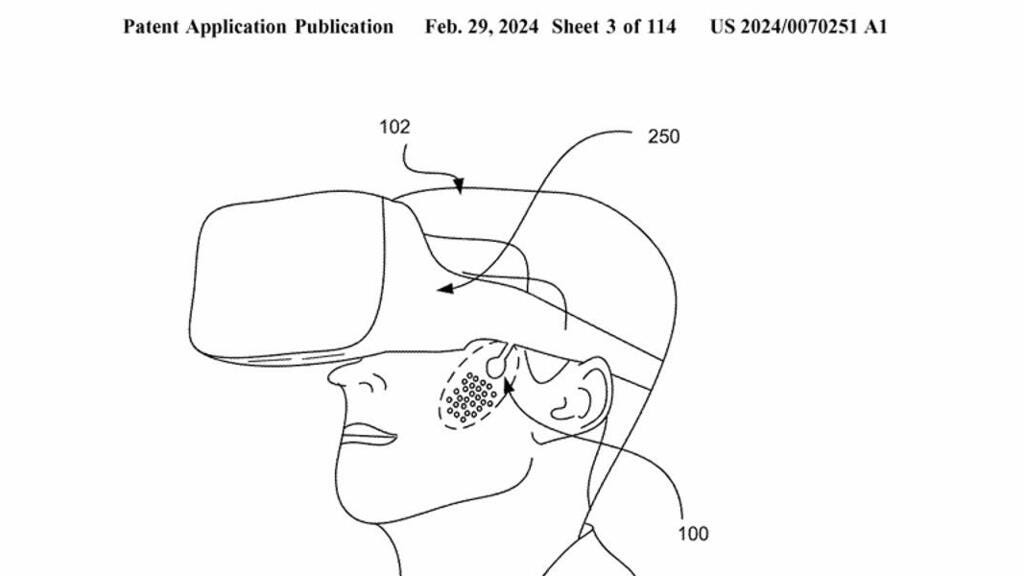

The breakthrough technology works by detecting and interpreting microscopic movements of facial muscles, skin, and jaw that occur during internal speech or whispering. These movements, invisible to the human eye, are captured through advanced computer vision sensors and translated into words and commands that devices can understand and execute.

Unlike existing solutions in the field of silent speech interfaces (SSI), Q.ai’s innovation appears to eliminate the need for physical sensors attached to the body. Previous technologies, including MIT’s AlterEgo system and Israeli company xTrodes’ adhesive patches, required users to wear sensors on their jaw, neck, or facial muscles to detect the electrical signals passing through muscles during speech.

Q.ai’s optical approach represents a significant leap forward, potentially enabling seamless integration into existing consumer devices like smartphones, headphones, and augmented reality headsets without requiring users to wear additional hardware.

The technology could also offer strong noise reduction capabilities. By focusing on the physical movements associated with speech production rather than sound waves, the system could in principle isolate a user’s intended communication from background noise, a feature that could transform phone calls in noisy environments.

Strategic Implications for Apple’s Product Ecosystem

Industry analysts suggest the acquisition could dramatically enhance several of Apple’s flagship products and services. Siri, Apple’s voice assistant, could become far more capable and responsive, understanding user intentions even in situations where speaking aloud is impractical or impossible. The Apple Watch and Vision Pro headset also stand to benefit significantly, since users could interact with these devices through subtle facial movements or silent speech alone.

Tom Hulme, managing partner at Google Ventures and one of Q.ai’s early investors, framed the development in revolutionary terms. “For decades, we were forced to speak the language of machines, learning to type, click and swipe,” he said. “We believe we are in the middle of a new technological revolution, a period in which the machine finally learns to understand us.”

Growing Competition in Neural Interface Technology

Apple’s move comes amid intensifying competition in the neural interface and silent speech technology sector. Meta’s Reality Labs acquired CTRL-Labs, a company focused on monitoring muscle signals for similar applications. NASA has been developing sub-vocal speech systems to enable astronauts to communicate in extremely noisy environments or inside specialized helmets where conventional microphones fail.

The technology has also attracted attention from defense establishments worldwide. Dr. Alona Barnea, head of the neurotechnology department at MAFAT, Israel’s equivalent of the U.S. Defense Advanced Research Projects Agency, highlighted the operational significance: “Think about the operational implications when there is a need for silent communication or activity in a noisy area.”

Democratizing Communication

While Q.ai has maintained near-complete secrecy about its product throughout its short existence, the company’s website hints at ambitious goals including “extremely high bandwidth, unprecedented privacy, accessibility, multilingualism and more.” The technology could prove transformative for individuals with speech disabilities or those working in environments where verbal communication is challenging.

Fascinating read on the optical approach vs. wearable sensors. The elimination of physical contact points is kind of a game changer for mainstream adoption, since most SSI tech has been held back by the awkwardness of wearing electrode patches. Back when I tested AlterEgo prototypes, the jaw sensor was noticeable enough that it felt like a barrier to everyday use. Q.ai solving this with just computer vision sensors means we might actually see this in iPhones within 2 years.